For enterprises, chatbots such as ChatGPT have the potential to automate mundane tasks or enhance complex communications, such as creating email sales campaigns, fixing computer code, or improving customer support.

Research firm Gartner predicts that by 2025, the market for AI software will reach almost $134.8 billion, and market growth is expected to accelerate from 14.4% in 2021 to 31.1% in 2025 — far outpacing overall growth in the software market.

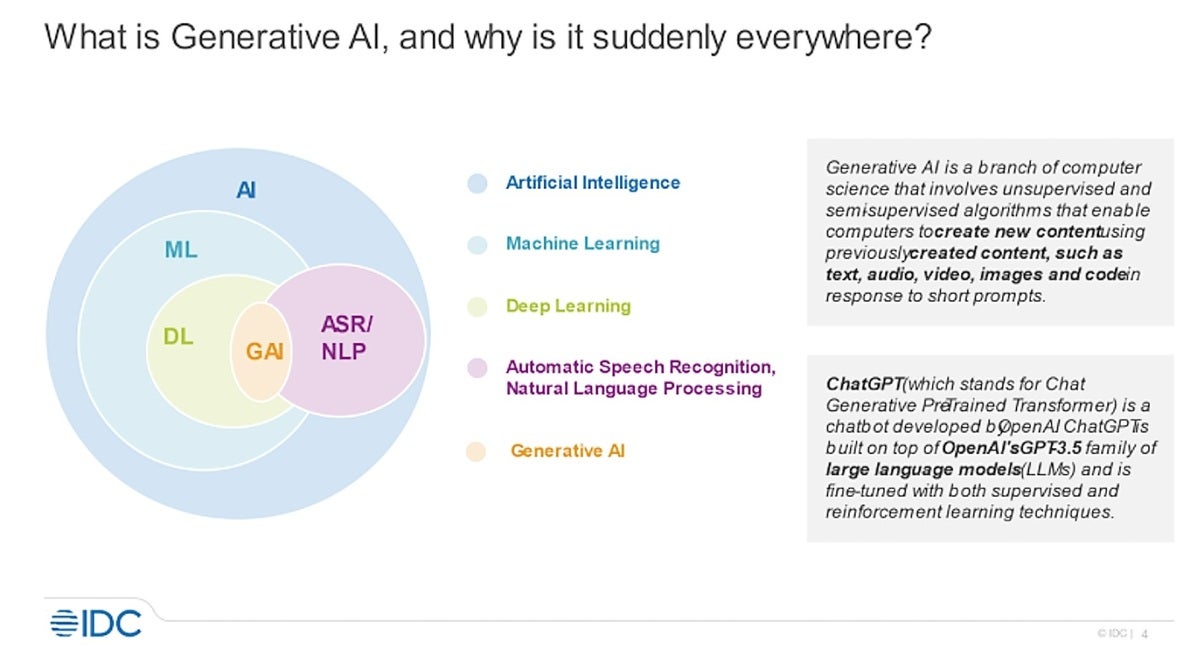

A large part of that market will be chatbot technology, which uses artificial intelligence (AI) and natural language processing to respond to user queries. The human-like answers are in the form of prose; more sophisticated programs allow for follow-up questions and responses, and they can be modified for specific business purposes.

IDC

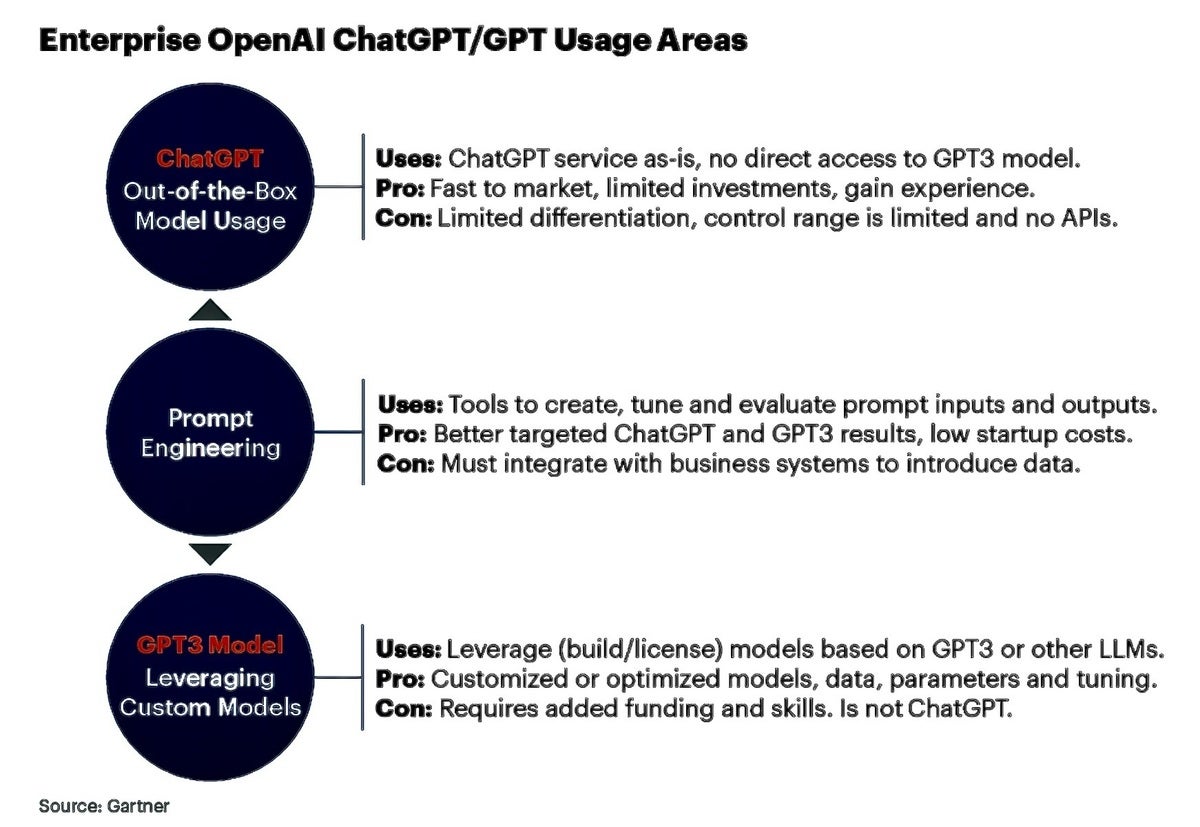

In a report last week, Gartner spelled out possible uses for ChatGPT and its base language model GPT-3 (GPT-3.5 and GPT-4 also exist), which can be customized.

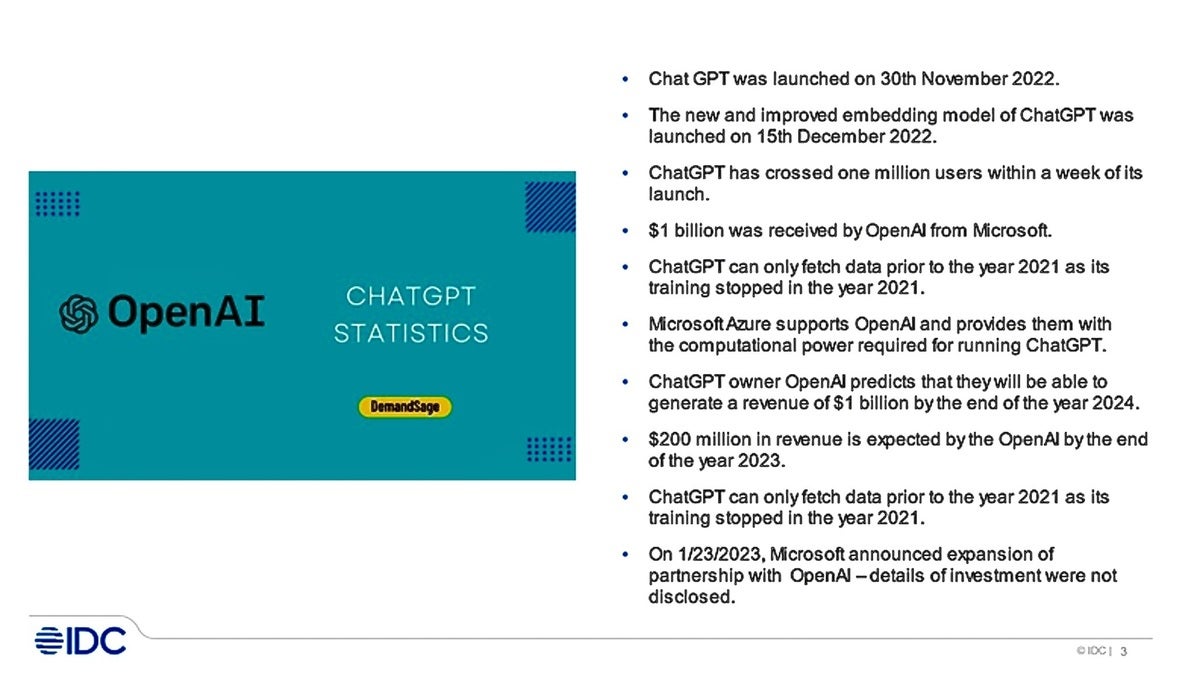

ChatGPT, launched by research lab OpenAI in November, immediately went viral and had 1 million users in just its first five days because of the sophisticated way it generates in-depth, human-like prose responses to queries.

It is most commonly used — for now — "out of the box" as a text-based web-chat interface. There is no API access for it at the moment, though GPT-3 does offer API access. (Microsoft also plans to offer APIs for its Azure OpenAI ChatGPT version, available soon.)

Enterprises can use the out-of-the-box approach to augment or create content, manipulate text in emails to soften language or take a particular tone, and summarize or simplify content. “This can be done with limited investments,” Gartner explained in its report.

What's the difference between ChatGPT, GPT-3, and Azure OpenAI?

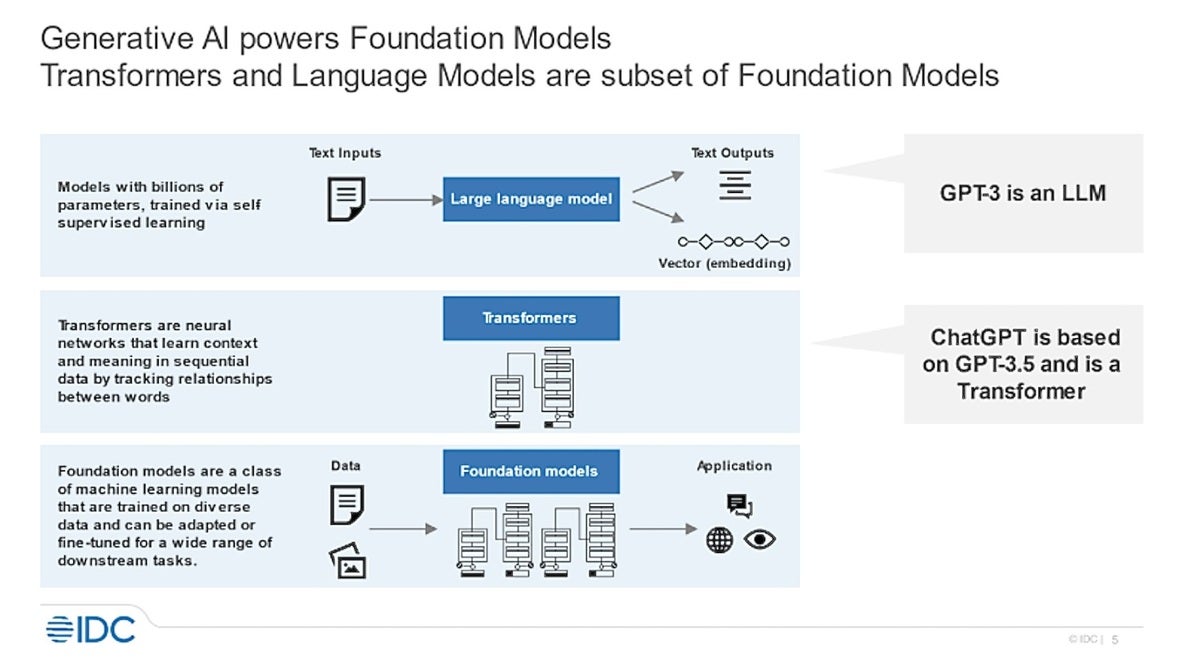

Both ChatGPT and GPT-3 (which stands for Generative Pre-trained Transformer) are machine learning language models trained by OpenAI, a San Francisco-based research lab and company. While both ChatGPT and GPT-3 can produce human-like text responses to queries, they are not equal in sophistication.

One of the main differences between ChatGPT and GPT-3 is their size and capacity, according to a senior solutions architect with TripStax.

“ChatGPT is specifically designed for chatbot applications, while GPT-3 is more general-purpose and can be used for a wider range of tasks,” Muhammad A. wrote in a blog post. “This means that ChatGPT may be more effective for generating responses in a conversational context, while GPT-3 may be better suited for tasks such as language translation or content creation.”

It is not possible to customize ChatGPT, since the language model on which it is based cannot be accessed. Though its creator company is called OpenAI, ChatGPT is not an open-source software application. However, OpenAI has made the GPT-3 model, as well as other large language models (LLMs), available. LLMs are machine learning applications that can perform a number of natural language processing tasks.

“Because the underlying data is specific to the objectives, there is significantly more control over the process, possibly creating better results,” Gartner said. "Although this approach requires significant skills, data curation and funding, the emergence of a market for third-party, fit-for-purpose specialized models may make this option increasingly attractive."

For example, ChatGPT is offered through Microsoft's Azure OpenAI Service, giving business and application developers a way to use the new technology. But Microsoft’s new and improved Bing search engine uses GPT-4 (OpenAI’s latest version).

ChatGPT is based on a smaller text model, with a capacity of around 117 million parameters. GPT-3, which was trained on a massive 45TB of text data, is significantly larger, with a capacity of 175 billion parameters, Muhammad noted.

ChatGPT is also not connected to the internet, and it can occasionally produce incorrect answers. It has limited knowledge of world events after 2021 and may also occasionally produce harmful instructions or biased content, according to an OpenAI FAQ.

“ChatGPT is an application made up of pre- and post-preparation and screening, and a customized version of GPT-3.5,” said Bern Elliot, a vice president and distinguished analyst with Gartner. “You submit questions along with any added information, called prompts. While you can’t access the customized GPT-3 in ChatGPT, the way you ask the question — the prompting — can have an important effect on the quality of the result.”

This is generally called “prompt engineering,” and it can be done on any large language model. In many cases, users can also access an underlying LLM such as GPT-3.
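As a rough illustration of what prompt engineering looks like in practice, the Python sketch below wraps the same question in two differently worded prompts. The helper `build_prompt` and its parameters are hypothetical and not part of any OpenAI interface; the sketch only assembles text and leaves out the step of sending it to a model.

```python
def build_prompt(question, audience=None, tone=None, max_words=None):
    """Assemble a prompt string from a question plus optional instructions.

    Hypothetical helper for illustration only; it does not call any model.
    """
    instructions = []
    if audience:
        instructions.append(f"Write for {audience}.")
    if tone:
        instructions.append(f"Use a {tone} tone.")
    if max_words:
        instructions.append(f"Answer in at most {max_words} words.")
    # Instructions come first so the question is the last line of the prompt.
    parts = instructions + [f"Question: {question}"]
    return "\n".join(parts)


question = "How do I reset my account password?"

# The bare prompt contains only the question.
bare_prompt = build_prompt(question)

# The engineered prompt adds audience, tone, and length guidance.
engineered_prompt = build_prompt(
    question,
    audience="a non-technical customer",
    tone="friendly",
    max_words=80,
)

print(bare_prompt)
print("---")
print(engineered_prompt)
```

Both prompts ask the same thing, but the second gives the model more to work with, which is the kind of difference in wording that Elliot says can affect the quality of the result.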

ChatGPT and GPT-3 uses

Fundamentally, Gartner said, ChatGPT can be used to improve content creation and transformation automation while providing a fast and engaging user experience.

The simplest way to use ChatGPT is as a question-and-answer prompt. For example, “How many miles by car does it take to get from Boston to San Francisco?”

ChatGPT can also be used to create written content, or augment content already written to give it a different intonation, by softening or professionalizing the language.

“There are many ways ChatGPT can produce ‘draft’ text that meets the length and style desired, which can then be reviewed by the user,” Gartner said in its report. “Specific uses include drafts of marketing descriptions, letters of recommendation, essays, manuals or instructions, training guides, social media or news posts.”

“Creating email sales campaign material or suggesting answers to customer agents is reasonable usage,” Gartner's Elliot added.

The chatbot technology can also offer summaries of conversations, articles, emails, and web pages.

Another use for ChatGPT and GPT-3 is to improve existing customer service chatbots so they offer more detailed and human-like responses.

The platforms can also improve customer intent identification, summarize conversations, answer customer questions, and direct customers to resources. Doing this requires enterprise context, service descriptions, permissions, business logic, formality of tone, and even brand tone, which would need to be added to the GPT-3 language model.
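One simplified way to picture adding enterprise context is to pass it alongside each customer question rather than modifying the model itself. The Python sketch below takes that shortcut. The `EnterpriseContext` class, the `build_messages` function, and the role/content message layout are all assumptions made for illustration, and no model is called.

```python
from dataclasses import dataclass, field


@dataclass
class EnterpriseContext:
    """Hypothetical container for the kinds of context listed above."""
    company: str
    brand_tone: str
    services: dict = field(default_factory=dict)        # service name -> description
    allowed_topics: list = field(default_factory=list)  # simple stand-in for permissions


def build_messages(context, customer_question):
    """Return a system/user message pair that carries the enterprise context."""
    service_lines = [f"- {name}: {desc}" for name, desc in context.services.items()]
    system_text = "\n".join(
        [
            f"You are a support assistant for {context.company}.",
            f"Tone: {context.brand_tone}.",
            "Services:",
            *service_lines,
            "Only answer questions about: " + ", ".join(context.allowed_topics) + ".",
            "If a question is out of scope, direct the customer to a human agent.",
        ]
    )
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": customer_question},
    ]


# Illustrative values only; none of these come from a real deployment.
ctx = EnterpriseContext(
    company="Example Corp",
    brand_tone="formal but warm",
    services={"Delivery": "Standard shipping within five business days"},
    allowed_topics=["orders", "delivery", "returns"],
)
messages = build_messages(ctx, "Can I change my delivery date?")
for message in messages:
    print(message["role"], "->", message["content"])
```

Keeping the context in a separate structure means the business logic and tone can be reviewed and updated without touching the customer's question, though a production system would likely need far richer permissions handling than a list of topics.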

Sales and marketing teams could also use ChatGPT and GPT-3 to provide recommendations and product descriptions to potential customers on a website or via a chatbot. Again, the chatbot platform would need to be customized with enterprise context.

Chatbots have also been used as personal assistants to manage schedules, summarize emails, compose emails and replies, and draft common documents.

In education, chatbots can be used to create personal learning experiences, as a tutor would. And, in healthcare, chatbots and applications can provide simple language descriptions of medical information and treatment recommendations.

Prompt engineering

The GPT-3 model inside the ChatGPT service cannot be modified on its own, Elliot explained, but users can get the base GPT-3 model and modify it separately for use in a chatbot engine (without the ChatGPT application). The GPT-3 model would simply be used like other LLMs.

For example, users can add data and tune parameters of the GPT-3 model or dataset. The responses those models return are also influenced by the wording used to ask the questions. “So again, prompt engineering can be useful,” Elliot said.

“Although this approach requires significant skills, data curation and funding, the emergence of a market for third-party fit-for-purpose specialized models may make this option increasingly attractive,” Gartner said in its report.

While just emerging, the use of ChatGPT and GPT-3 for software code generation, translation, explanation, and verification holds the promise of augmenting the development process. That use is most likely in an integrated development environment (IDE), according to Gartner.

For example, a developer could type into the search box: “This code is not working the way I expect — how do I fix it?” The first response isn’t likely to fix the problem, but with follow-up questions to the responses, ChatGPT could devise a solution, according to OpenAI.

ChatGPT can also write code from prose, convert code from one programming language to another, correct erroneous code, and explain code.

“Suggesting alternatives to software code or identifying coding errors is valid,” Elliot said. “But don’t let ChatGPT ‘fix’ code, but rather suggest areas to review.”

For example, OpenAI provided this example of a coding query and response on its FAQ page:

Chatbot risks

Gartner warned there are risks in relying on ChatGPT because many users may not understand the data, security, and analytics limitations.

One of the biggest concerns for business is that ChatGPT can go overboard, generating eloquent prose with answers in natural language that contain little content of value — or worse, untruthful statements, Gartner said. “It should be mandatory that users review the output for accuracy, appropriateness and actual usefulness before accepting any result.”

Using a chatbot can also risk exposing confidential and personally identifiable information (PII), so it's important for companies to be mindful of what data is used to feed the chatbot and avoid including confidential information. It's also critical to work with vendors offering strong data usage and ownership policies.
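One way to act on that advice is to scrub obvious identifiers from text before it is pasted into or sent to a chatbot. The Python sketch below is a minimal example that assumes only email addresses and US-style phone numbers need masking. The `redact` function and both patterns are illustrative, and real PII detection would need much broader coverage (names, account numbers, addresses, and so on).

```python
import re

# Deliberately simple patterns; they will miss many real-world formats.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(text):
    """Replace email addresses and US-style phone numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


draft = "Please reply to jane.doe@example.com or call 617-555-0123 about the invoice."
safe_draft = redact(draft)
print(safe_draft)
```

A filter like this reduces accidental exposure but does not replace a usage policy, since it cannot recognize confidential business information that has no fixed pattern.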

OpenAI CEO Sam Altman warned users in a December tweet that ChatGPT is “incredibly limited,” saying it’s a mistake to be “relying on it for anything important right now.”

“It’s a preview of progress; we have lots of work to do on robustness and truthfulness,” Altman wrote, adding that ChatGPT is best for “creative inspiration."

Gartner, in its report, essentially agreed: “Recognize that this is a very early stage and a hyped technology” with potentially significant uses. “So proceed, but don’t over pivot.”

It suggested that companies encourage “out-of-the-box” thinking about work processes, define usage and governance guidelines around AI, and develop a task force — a manual/human reporting pipeline — to the CIO and CEO.

Elliot suggested that users favor Microsoft’s Azure OpenAI Service ChatGPT over the OpenAI ChatGPT for the enterprise, “as Microsoft offers enterprise security and compliance controls associated with other Microsoft products.”

"If you plan to use confidential information, plan on using Azure," Elliot said.

(Microsoft has said it plans to enable security and confidentiality policies on Azure OpenAI as it does for other Azure services.)

Finally, don't allow employees to ask OpenAI ChatGPT questions that disclose confidential enterprise data, Elliot said. “Issue clear policies that educate employees on inherent ChatGPT related risks."

AI and search wars

Founded in 2015, OpenAI had the backing of investors such as Elon Musk, Amazon Web Services (AWS), Infosys, YC Research, and Altman, who became OpenAI CEO in 2019, the year the company shifted from a nonprofit to a “capped-profit” structure.

Other early investors included Microsoft, which plowed $1 billion into OpenAI in 2019, and last Monday announced plans to make an additional multi-billion dollar investment. Microsoft also announced its Bing search engine is being upgraded using GPT-4, the latest version of the AI language model built by OpenAI.

That announcement started something of a search chatbot war between Microsoft and Google. Microsoft hopes its use of GPT-4 will give Bing a boost over Google’s long-dominant search engine. Google just announced its own flavor of chatbot technology called Bard. It is a conversational AI service powered by a technology called Language Model for Dialogue Applications (or LaMDA for short).

Preply, a global language learning platform, published the results of a study that compared the intelligence of Google to ChatGPT. Preply assembled what it called “a panel of communication experts” who assessed each AI platform on 40 intelligence challenges.

The challenge showed ChatGPT beating Google 23 to 16, with one tie. Google, however, excelled at basic questions and queries where information changes over time.